AI and Computer Vision

Young woman and smart phone with facial recognition (RyanKing999, iStockphoto)

Learn about how computers see and learn to recognize objects and human faces.

Visual and Facial Recognition Technology

Throughout history, humans have developed machines to do work for us. Recently, this has included machines that imitate our senses, like our vision. Vision recognition technologies are technologies that can see and label things. These let machines, robots, and apps see and understand the world as we see it.

Computer vision (CV) is a type of computer engineering. It involves teaching computers to "see" digital images like photos and videos. Engineers who work in this field have a variety of tasks. One task is finding ways to use digital cameras with devices and computers. Another is finding ways to teach computers to recognize images and videos. This is done through coding or machine learning.

There are different types of computer vision. They depend on what the computer is trying to identify. The computer may look for text, images or faces. We will look at these three in detail.

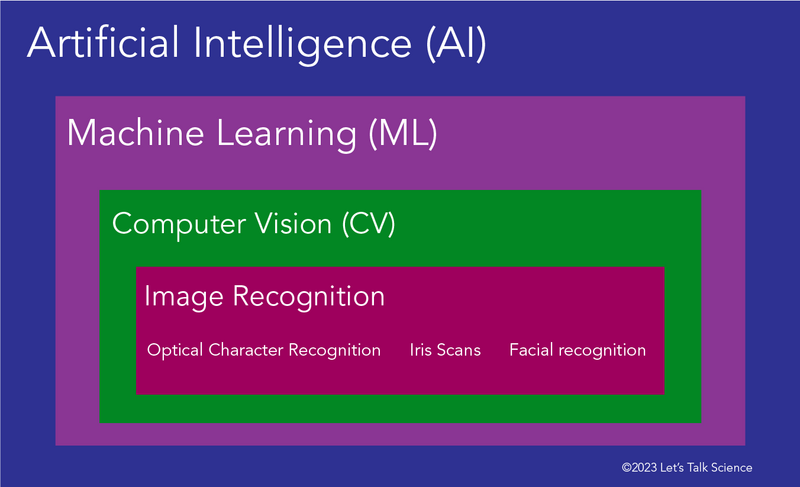

Image - Text Version

Shown is a colour diagram of labelled rectangles nested inside each other. The largest rectangle, on the outside, is blue and labelled “Artificial Intelligence (AI).” Inside that is a smaller purple one labelled “Machine Learning (ML).” Inside that is an even smaller green one labelled “Computer Vision (CV).” The smallest, innermost rectangle is dark pink. It is labelled “Image Recognition” in large letters. Three smaller labels below read: “Optical Character Recognition,” “Iris Scans,” and “Facial Recognition.”

Optical Character Recognition

Optical character recognition (OCR) is a technology used to look for text. The text may be handwritten or in typed documents.

Let’s see how it works for handwriting.

The first step in OCR is taking pictures of people's handwriting. Then, people scan them into a computer. Next, people match the handwritten text with the characters on a computer. A character is any letter, number, space, punctuation mark, or symbol. This teaches the computer which handwriting goes with which character. Now the computer will be able to identify and match handwriting with text.

This is an example of supervised machine learning. Supervised machine learning involves attaching labels and file names to data, like images. In OCR, machines learn to identify characters using many labelled images of handwritten letters. The machine can then look for patterns in all the images of the same character.
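
To make the idea of learning from labelled images concrete, here is a minimal Python sketch. It is not how a real OCR system works. The tiny 3-by-5 images, their labels, and the function names `count_differences` and `classify` are all made up for illustration. The program simply finds the labelled image that is most similar to a new image and reuses its label.

```python
# Each training example pairs a tiny 3x5 black-and-white image with a label.
# "1" marks an inked pixel and "0" marks a blank pixel.
# These images and labels are invented for illustration only.
TRAINING_DATA = [
    (("010",
      "010",
      "010",
      "010",
      "010"), "1"),
    (("110",
      "010",
      "010",
      "010",
      "111"), "1"),
    (("111",
      "101",
      "101",
      "101",
      "111"), "0"),
    (("010",
      "101",
      "101",
      "101",
      "010"), "0"),
]


def count_differences(image_a, image_b):
    """Count the pixels where two same-sized images disagree."""
    return sum(
        pixel_a != pixel_b
        for row_a, row_b in zip(image_a, image_b)
        for pixel_a, pixel_b in zip(row_a, row_b)
    )


def classify(image):
    """Return the label of the training image most similar to `image`."""
    _, best_label = min(
        TRAINING_DATA,
        key=lambda example: count_differences(image, example[0]),
    )
    return best_label


# A new handwritten character the program has not seen before.
new_image = ("010",
             "110",
             "010",
             "010",
             "010")
print(classify(new_image))  # prints 1
```

The new image differs from the first labelled "1" by only one pixel, so the program gives it the label "1". Real systems use far more images and far more pixels, but the idea of comparing new data with labelled examples is the same.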

Let’s take the example of the character one (1). Rules can be set to look for the following patterns in how humans write the character 1.

Pattern rules:

- Often found close to other numerals.

- A long straight vertical line, e.g. l

- An optional short line that hangs from the top backward at 45 degrees, e.g. 1

- An optional short horizontal line centred on the bottom, e.g. 1

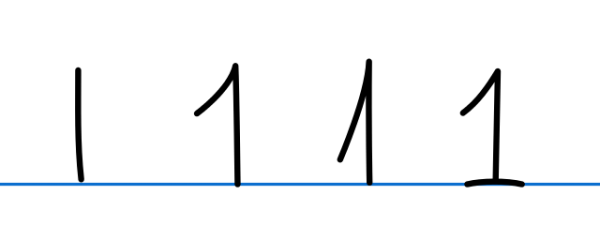

Image - Text Version

Shown is a colour illustration of the number one, written four different ways. The numbers are handwritten in black on a blue line. The first is a single vertical line. The second is a vertical line with a short, curved line pointing from the top, down and to the left. The third is similar to the second, but the short line is longer, and angled lower. The last has a short line on top, similar to the second. It also has a short, horizontal line across the bottom, like a base.

Try this!

How would you describe a pattern for the numeral 3? Or for the numeral 9?

These types of pattern rules can be written as computer code. The code includes a step-by-step set of instructions, or algorithm. Once a computer has this code, an OCR program can translate handwriting into computer text. Some computer vision models can learn and record the pattern rules themselves. Then, when they see a new character, they analyse it the same way and find which group it matches.
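
As a rough sketch of pattern rules written as code, the Python example below checks the rules for the numeral 1. It assumes that an earlier step has already broken the handwriting into named strokes. That step, the stroke names, and the function `looks_like_one` are hypothetical and only here for illustration. The rule about being close to other numerals is left out to keep the example short.

```python
# Stroke names are invented for this example. A real OCR program would have
# to work these out from the pixels of the image first.
REQUIRED_STROKES = {"long vertical line"}
OPTIONAL_STROKES = {"short top line at 45 degrees", "short bottom line"}


def looks_like_one(strokes):
    """Apply the pattern rules for the numeral 1 to a list of stroke names."""
    stroke_set = set(strokes)
    # Rule: the long straight vertical line must be present.
    has_required = REQUIRED_STROKES <= stroke_set
    # Rule: any other stroke must be one of the two optional short lines.
    only_allowed = stroke_set <= (REQUIRED_STROKES | OPTIONAL_STROKES)
    return has_required and only_allowed


print(looks_like_one(["long vertical line"]))  # prints True
print(looks_like_one(["long vertical line", "short bottom line"]))  # prints True
print(looks_like_one(["long vertical line", "closed loop"]))  # prints False
```

Writing rules by hand like this works for simple shapes, but it gets hard quickly. That is one reason many systems learn the patterns from labelled examples instead.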

Try this!

You can try it yourself using the program on this website.

OCR technologies are now found in some smartphone apps. These apps take photos of your handwritten notes. Then they convert them into digital text. Being able to handwrite our notes and turn them into text is much easier than typing on a small device. Turning visual information, like your handwritten notes, into text data has many advantages. Text data can be searchable, it can be put into categories, and it takes up a lot less memory on your phone or computer!

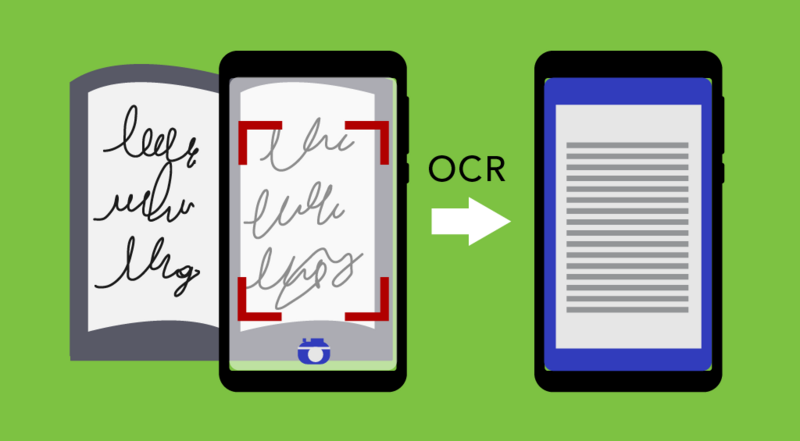

Image - Text Version

Shown is a colour illustration of a smartphone taking a photo of handwriting, then displaying a typed document. The same smartphone is shown twice. On the left of the illustration, it is shown over top of an open notebook filled with handwriting. The smartphone screen shows that a photo is being taken. To the right, a white arrow labelled “OCR” points to the same smartphone displaying a neatly typed document. Neither the handwriting nor the typing is legible.

Object and Visual Recognition

Many manufacturing processes involve machines and robotic systems that detect and recognize objects. Object detection can be as simple as a sensor that uses light to see if an item has passed by. Think of a labelling machine. It detects if a box moving along a conveyor belt is in the correct position. When the system ‘sees’ that the package is in the right location, it prints a label on it.
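
The labelling machine can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the light readings are made-up numbers between 0 and 1, the threshold of 0.5 is arbitrary, and the names `box_in_position` and `run_labeller` are hypothetical. A low reading means a box is blocking the light beam.

```python
# Assumed for this example: readings below this value mean the beam is blocked.
LIGHT_THRESHOLD = 0.5


def box_in_position(light_reading):
    """Return True when the reading shows something is blocking the light."""
    return light_reading < LIGHT_THRESHOLD


def run_labeller(sensor_readings):
    """Return the steps at which a label would be printed.

    A label is printed once per box, at the moment the box first blocks
    the light beam.
    """
    label_steps = []
    beam_was_blocked = False
    for step, reading in enumerate(sensor_readings):
        blocked = box_in_position(reading)
        if blocked and not beam_was_blocked:
            label_steps.append(step)
        beam_was_blocked = blocked
    return label_steps


# Two boxes pass the sensor: one at steps 2 to 3 and one at step 6.
readings = [0.9, 0.9, 0.2, 0.1, 0.8, 0.9, 0.3, 0.9]
print(run_labeller(readings))  # prints [2, 6]
```

Notice that the program only knows that something is there. It has no idea what the object is.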

Today, people are developing even more complex visual recognition systems for robots. These let the robots better identify and handle objects. It is important that these systems come close to matching human abilities. For example, a robot needs to recognize and adjust its grip one way for a paper cup and a different way for a glass cup.

Simple visual object detection systems detect where something is. This is like the back-up camera in a car. It uses object detection sensors and cameras to detect objects. But it doesn't tell the driver what the objects are.

Image recognition systems figure out what objects are. This is one of the most important systems in autonomous cars. Like cars with sensors, autonomous cars need to be able to detect objects. But they also need to decide what to do, depending on the object and situation. For example, if the car recognizes a stop sign, it needs to stop. But if a car detects a person, it needs to analyze where that person is and what they’re doing. Is the person safely on the sidewalk? Is the person crossing the street? You can imagine that this system needs to be really good at what it does!
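
The difference between detecting an object and deciding what to do about it can be sketched as a simple set of rules in Python. This is a toy example, not how a real autonomous car is programmed. The function name `choose_action`, the label and location strings, and the actions for a person are assumptions made for illustration. Only the stop sign rule comes directly from the text above.

```python
def choose_action(label, location=None):
    """Pick an action from the recognized object and where it is."""
    if label == "stop sign":
        return "stop"
    if label == "person":
        # Assumed rules: what the car does depends on where the person is.
        if location == "crossing the street":
            return "stop"
        if location == "on the sidewalk":
            return "continue with caution"
        # If the car is unsure where the person is, it plays it safe.
        return "slow down"
    # Assumed default for any object the rules do not cover.
    return "continue"


print(choose_action("stop sign"))  # prints stop
print(choose_action("person", "on the sidewalk"))  # prints continue with caution
print(choose_action("person", "crossing the street"))  # prints stop
```

A back-up camera could not use rules like these, because it never learns the label. Recognizing what the object is has to come first.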

Image - Text Version

Shown is a colour photograph of cars on a highway with graphics of blue circles and rectangles. A red car in the foreground is in focus. Blue circles radiate on the pavement around it. This spreads to all the road surfaces in the photo, including the opposite lanes. Each of the other vehicles on the highway is surrounded by a white rectangle.

Autonomous cars are not the only systems that use image recognition. The smartphone app PlantNet is another example. It lets people find information about plants. Using your phone, you take a picture of the plant. The image recognition system compares your image to many other images of plants it already knows. It then suggests what your plant is. Leafsnap and Florist are similar apps. They help people to identify trees and flowers from images or their camera.

Facial Recognition Technologies

Facial Recognition Technology (FRT) is a technology that identifies human faces. The process it uses is like the way humans recognize each other. A computer's facial recognition system is like your facial recognition system. You see someone's face with your eyes. A smartphone takes an image of someone's face with its camera. Your brain takes the features of the face and stores them in your memory. This is what lets you remember people later. A computer does the same using algorithms.

Faces are unique. Like fingerprints, they can be measured and compared. The term for measuring biological features is biometrics. Facial biometric software measures and maps parts of a face. This includes things like the shape and colour of eyes, noses, mouths and chins. We call these measurements nodal points. A geometric map of a person's face needs about 80 nodal points.

Image - Text Version

Shown is a colour illustration of three photographs of the same person, overlaid with white dots, then lines connecting the dots. The photographs are displayed as if on a computer screen. Each is the same photo, with different additions. The person in the photos is looking straight at the camera. They have pale skin, shoulder length blond hair, and a green t-shirt. In the first image, the person’s face is bracketed by four red lines indicating corners. In the second, their face is covered with small white dots, outlining their features. In the third, those dots are connected with short white lines.

The image and nodal points are then written as code. We call this code the faceprint or facial signature. Once a faceprint exists, a computer can compare it to other faceprint codes in a database of pictures. Faceprints are pretty unique, but they are not as unique as an iris scan or iris print. An iris scan is an image of a person's iris. The iris is the coloured part of your eye. Your iris is unique to you, like your fingerprint. This makes it a good means of identification.
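
Comparing faceprints can be sketched in Python by treating each faceprint as a list of numbers and measuring how far apart two lists are. This is a simplified illustration, not the method any particular FRT product uses. The names, the four measurements per face (a real faceprint would use about 80), the distance limit, and the functions `faceprint_distance` and `find_best_match` are all made up for this example.

```python
import math


def faceprint_distance(print_a, print_b):
    """Return the straight-line distance between two faceprints."""
    return math.dist(print_a, print_b)


def find_best_match(new_print, database, max_distance):
    """Return the name of the closest stored faceprint, or None.

    A match only counts if its distance is no more than `max_distance`.
    """
    best_name = None
    best_distance = max_distance
    for name, stored_print in database.items():
        distance = faceprint_distance(new_print, stored_print)
        if distance <= best_distance:
            best_name = name
            best_distance = distance
    return best_name


# Invented faceprints with four measurements each.
database = {
    "Alex": [4.2, 3.1, 5.0, 2.2],
    "Sam": [3.6, 3.9, 4.1, 2.8],
}

new_face = [4.1, 3.0, 5.1, 2.2]
print(find_best_match(new_face, database, max_distance=0.5))  # prints Alex

stranger = [1.0, 1.0, 1.0, 1.0]
print(find_best_match(stranger, database, max_distance=0.5))  # prints None
```

The distance limit matters. If it is too large, the program may match a face to the wrong person. If it is too small, it may fail to recognize someone whose photo is slightly different from the stored one.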

Did you know?

Iris scanners use around 240 nodal points.

Many areas now use FRTs. The main area is security. Some smartphones and locks use faceprints or iris prints instead of passwords. The advantage of using your face is that you don't need to remember your password!

Law enforcement can use FRT to identify criminals from surveillance video footage. Governments could use FRT to confirm a person's identification. They could also use it when issuing passports, or at borders and airport security. Unlike your face, your iris doesn't change over time. So it can be used to identify you throughout your life. But iris prints are not as easy to take as faceprints.

Image - Text Version

Shown is a colour photograph of people in an airport, overlaid with squares and labels around their faces. Six people are out of focus in the background. They are lined up along a luggage carousel, watching for their bags. The faces of the two people on the right are outlined with red squares with labels underneath. The faces of the four people on the left have yellow squares and labels. The labels are too small to read. Two suitcases are on a conveyor belt in the foreground.

Concerns About FRT

FRT is pretty good, but it is not always accurate. One problem is that the pictures and videos we take may not be clear. Photos taken in poor lighting can affect the ability of FRT to make a positive match. Changes in glasses, jewellery, and facial hair can also affect FRT. In those situations, the matching results can be wrong. New software for both 2D and 3D images captured from video is improving FRT. Some systems even allow for changes in hair or things people use to disguise themselves. These improvements will help make FRT more accurate.

Another issue with FRT is the quality of the data given to computers. Algorithms used to analyze biometrics are given thousands of pictures of people. But sometimes computers are not fed enough data on certain groups of people. These include people who are visible minorities in North America and Europe. This problem can lead to false identification. If used in law enforcement, false identification can have serious impacts on people’s lives. This is why we need to be careful when using technologies like FRT for identifying people.

Privacy is a big concern when it comes to FRT. What we look like is a big part of our identity. In some cases, we are okay with others having images of us. This includes groups like the government, which provides us with photo identification. What we do not want is people using images of us without our knowledge or permission. For example, some cities in China use FRT for shaming people. The names and pictures of people who break the law are shown on big screens. But in North America, some cities are already banning facial recognition.

One place you need to be careful of FRT is on social media. Did you know that when you post a picture on social media, you are giving the social media company permission to use it for their own purposes? Probably not. FRT allows these companies to collect and match faces with names. What they do with this information is not always clear.

More and more object and vision recognition systems are coming into our lives. These technologies can provide us with security and let us do things we could not do before. But we need to be aware that these technologies could also affect our freedom and our privacy. It is up to you to control how much information you share about yourself. This includes your face.

There are some things you can do. You can be thoughtful about who takes pictures of you and where they are posted. And you should always read the privacy policy for any social media platform you use. You should also pay attention to the news about your country’s regulations on privacy. Being an informed citizen is always a smart choice!

Let’s Talk Science appreciates the contributions of Melissa Valdez, Technology Consultant from AI & Quantum, for revisions to this backgrounder.

Learn More

Deep Learning for Robots: Learning from Large-Scale Interaction

On this page with multiple videos, you can learn about a real project that uses machine learning and computer vision so that robotic arms learn to correctly recognize and adapt their grasp to different objects.

Computer Vision: Crash Course Computer Science #35 (2017)

This Crash Course video (11:09 min.) from PBS explains what computer vision is and how it works.

What facial recognition steals from us

This video helps you understand how facial recognition works, along with its uses and dangers.

What’s Going On With Facial Recognition?

This video (7:31 min.) by Untangled presents some concerns of facial recognition.
