AI and Personal Vehicles

Cockpit of an autonomous car (baza178, iStockphoto)

Learn how artificial intelligence systems are integrated in the vehicles we drive.

Artificial Intelligence (AI) may seem new. But AI apps have been used in transportation for a while. Many modern vehicles use a Global Positioning System (GPS). This uses data from satellites to figure out where the vehicle is on Earth. Mapping algorithms use AI to determine the best way to get from point A to point B.

To do this, AI systems have learned to predict the best routes from huge amounts of data. Then they combine this data with real-time information from users. This includes things like how fast they drive along the route. These two types of data can give people accurate and precise information about their trip. The AI can even help drivers get around traffic and avoid construction.
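The idea of picking the best route and then updating it with live traffic can be sketched in a few lines of Python. This is only an illustration, not how any real navigation app is built. It uses a classic shortest-path method (Dijkstra's algorithm) rather than machine learning, and the place names and travel times are made up.

```python
import heapq

def fastest_route(roads, start, goal):
    """Return (total_minutes, path) for the quickest route, or None if there is none.

    `roads` maps each place to a list of (neighbour, minutes) pairs.
    """
    queue = [(0.0, start, [start])]  # (minutes so far, current place, path taken)
    visited = set()
    while queue:
        minutes, place, path = heapq.heappop(queue)  # always expand the quickest option
        if place == goal:
            return minutes, path
        if place in visited:
            continue
        visited.add(place)
        for neighbour, cost in roads.get(place, []):
            if neighbour not in visited:
                heapq.heappush(queue, (minutes + cost, neighbour, path + [neighbour]))
    return None

# Hypothetical road network: travel times in minutes.
roads = {
    "A": [("C", 4), ("D", 7)],
    "C": [("B", 5)],
    "D": [("B", 4)],
}
print(fastest_route(roads, "A", "B"))  # (9.0, ['A', 'C', 'B'])

# Live data reports a slowdown between C and B, so the best route changes.
roads["C"] = [("B", 12)]
print(fastest_route(roads, "A", "B"))  # (11.0, ['A', 'D', 'B'])
```

In a real system, the travel times would come from predictions learned from past trips, combined with the speeds of drivers who are on the road right now.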

Image - Text Version

Shown is a colour photograph of a map on a small screen attached to a car windshield. The screen is in focus in the foreground. It is attached to the glass above the dashboard using a suction cup. The map shows the road ahead with a thick red line leading to the horizon. Text boxes on the left of the screen show a time, a speed, and a large arrow pointing up, with the label “11 km.” Other text is too small to read. In the background, the road, the back of another car, and a building are all out of focus. Drops of rain are visible on the windshield.

Did you know?

Machine Learning (ML) is a type of AI. It is used to develop most transportation systems that use AI.

Many of the safety features in modern vehicles use AI. One example is driver assistance. Driver assistance systems help alert drivers to possible dangers. This could be something like beeping when a car is drifting out of its lane. These systems do this using a variety of sensors, including cameras and infrared sensors.

Some systems also help with the task of driving. This can include specific functions, like control systems that adjust the vehicle's speed or steering. It can also include more general functions, like using machine learning models to provide decisions based on different traffic conditions. All of these functions send data to a central data hub. Then that information is used as more training data for future models.

AI and Traffic Monitoring

No one likes being stuck in traffic! So city planners are always looking for ways to improve the flow of vehicles on roads. Installing sensors on traffic lights can help. The sensors send data to a large remote database. The data is then used to build different light-timing scenarios, which are analyzed to determine the best settings. City planners can also use machine learning to design better road systems. This could include things like building roundabouts instead of traffic lights.
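Here is a small sketch of what comparing light-timing scenarios could look like. It is a toy model under stated assumptions, not a real traffic engineering tool. The 60-second cycle, the number of cars that can pass per second of green light, and the car counts are all made-up values.

```python
CYCLE_SECONDS = 60            # assumed length of one full light cycle
CARS_PER_GREEN_SECOND = 0.5   # assumed number of cars that can pass per second of green

def cars_left_waiting(arrivals_per_cycle, green_seconds):
    """Cars still waiting in one direction after its green light ends."""
    served = CARS_PER_GREEN_SECOND * green_seconds
    return max(0.0, arrivals_per_cycle - served)

def best_split(ns_arrivals, ew_arrivals, candidates):
    """Pick the north-south green time that leaves the fewest cars waiting overall."""
    def total_left(ns_green):
        ew_green = CYCLE_SECONDS - ns_green  # east-west gets the rest of the cycle
        return (cars_left_waiting(ns_arrivals, ns_green)
                + cars_left_waiting(ew_arrivals, ew_green))
    return min(candidates, key=total_left)

# Hypothetical sensor counts: cars arriving per cycle in each direction.
scenarios = [20, 30, 40]  # candidate north-south green times, in seconds
print(best_split(ns_arrivals=18, ew_arrivals=8, candidates=scenarios))  # 40
```

With more cars arriving from the north and south, the scenario that gives that direction the longest green light leaves the fewest cars waiting.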

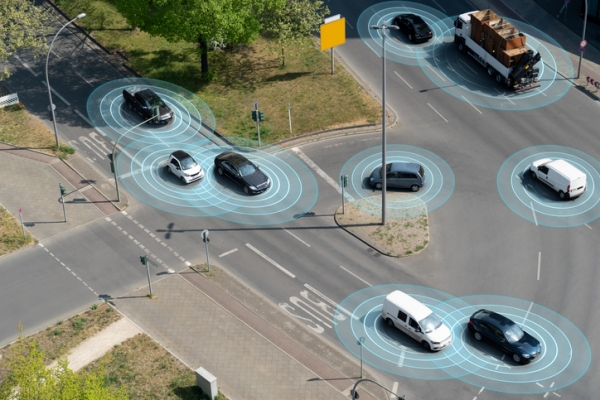

Image - Text Version

Shown is a colour photograph of cars at a large intersection, with blue concentric circles around them. Different sizes and shapes of vehicles are moving in several directions, through a complex intersection with lights, stop signs, and turning lanes. Each one is surrounded by four or five pale blue, concentric circles on the pavement under them.

AI and Road Safety

Did you know that more than 1 700 people die from car accidents each year in Canada? When looking at the whole world, this number jumps to approximately 1.3 million people. And that is not counting the more than 20 million people who suffer non-fatal injuries each year.

Three common causes of car accidents are speeding, impaired driving and distracted driving. To improve road safety, we can use AI systems to identify people who are doing these things. An AI system can look for patterns in people's driving, both good and bad. We can then teach the systems to look for certain things that are dangerous, like speeding.

Did you know?

Robocar, the fastest autonomous car, reached a speed of 282.42 km/h!

AI and Self-Driving Vehicles

Unlike humans, machines do not get distracted or drive while impaired. This led people to wonder if self-driving, or autonomous, vehicles could make our roads safer.

Safety is the most important reason people are developing self-driving cars. But it is not the only factor. Time is another factor. Imagine if people could use the time spent driving for other more fun or more productive tasks.

Did you know?

Driverless vehicles might seem like a solution to reduce traffic. But a study has shown that people who use driverless vehicles could actually spend more time on the road! This is not good news for the environment.

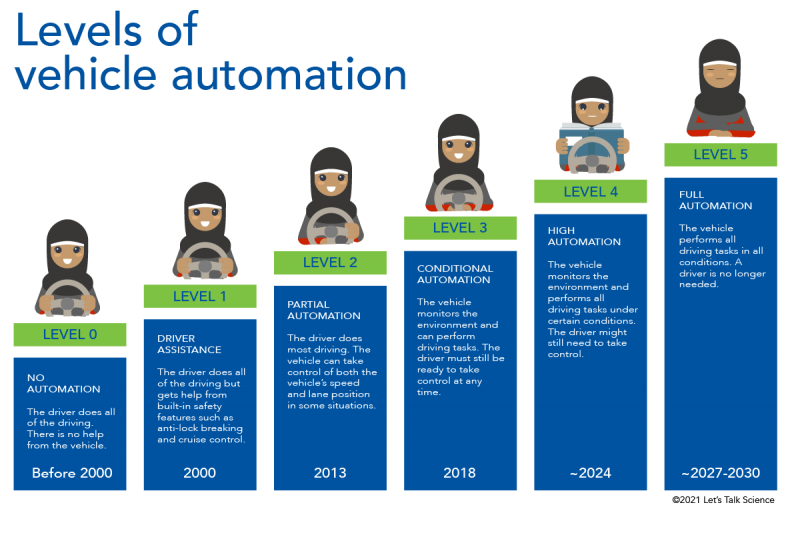

When it comes to cars, there are different levels of autonomy. Most modern vehicles have some features of Level 2 automation. Some new cars even have Level 3 or 4.

Image - Text Version

Shown is a colour infographic with six bars, rising in height from “No Automation” to “Full Automation.” The title “Levels of vehicle automation,” is in large letters in the top left corner. Below, each blue bar has an illustration of a driver in a car, a green label, a date, and a description. The first bar is labelled “Level 0,” titled “No Automation,” and dated “Before 2000.” The text inside reads: “The driver does all of the driving. There is no help from the vehicle.” In the illustration above, the driver has both hands on the steering wheel. The second bar is “Level 1, Driver Assistance, 2000.” The text reads: “The driver does all of the driving but gets help from built-in safety features such as anti-lock braking and cruise control.” Above, the driver still has both hands on the wheel. The third is “Level 2, Partial Automation, 2013.” The text reads: “The driver does most of the driving. The vehicle can take control of both the vehicle’s speed and lane position in some situations.” Above, the driver has only one hand on the steering wheel. The fourth is “Level 3, Conditional Automation, 2018.” The text reads: “The vehicle monitors the environment and can perform driving tasks. The driver must still be ready to take control at any time.” Above, the driver is still behind the wheel but they have both hands in their lap. The fifth is “Level 4, High Automation, ~2024.” The text reads: “The vehicle monitors the environment and performs all driving tasks under certain conditions. The driver might still need to take control.” Above, the driver is still behind the wheel, but they are reading a book. The sixth bar is “Level 5, Full Automation, ~2027-2030.” The text reads: “The vehicle performs all driving tasks in all conditions. A driver is no longer needed.” Above, the driver is no longer behind the wheel, but sitting with their arms folded and their eyes closed.

These cars can drive themselves under certain conditions, such as on the highway. It is important to remember that this technology is still new, and not perfect. People still need to keep their eyes on the road while driving in autonomous vehicles.

How do self-driving vehicles work?

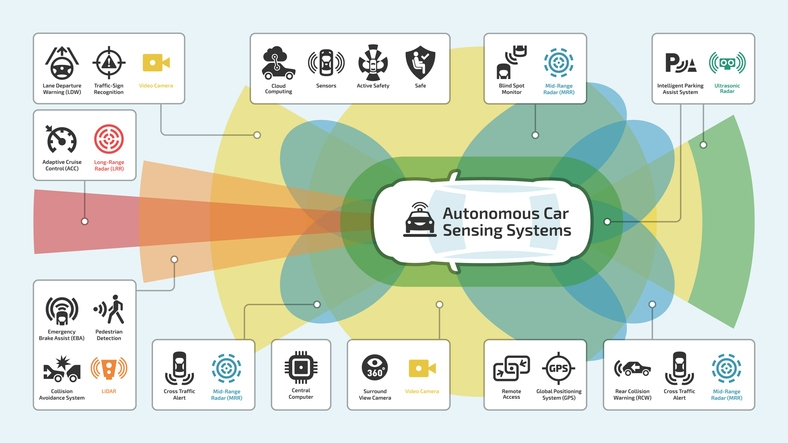

To drive themselves, cars need both hardware and software. The hardware is a set of sensors and mechanical parts. It allows the car to sense its environment and provide data for the car’s computer. It is like the driver’s eyes, hands and legs. Software is computer programming. It allows the car’s computer to make decisions. It is like the driver's brain.

Autonomous cars use many kinds of technologies to sense their environment. This includes high-definition cameras, ultrasonic sensors, radar and LIDAR. Radar uses radio waves to detect objects. LIDAR is like radar except that it uses pulses of light to detect objects. These let the car detect traffic lights, people riding bikes, or even a squirrel crossing the street! They are especially useful when weather conditions reduce visibility.
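A pulse of light can measure distance because light travels at a known speed. A common approach is to time how long the pulse takes to bounce off an object and come back. The distance is then half of the round trip. The sketch below shows the arithmetic only. The function name and the example timing are made up, and real LIDAR units do much more processing than this.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # metres per second

def lidar_distance_m(round_trip_seconds):
    """Distance to an object, given how long a light pulse took to go out and back."""
    # Divide by 2 because the pulse travels to the object and back again.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2

# A pulse that returns after 200 nanoseconds hit something about 30 metres away.
print(round(lidar_distance_m(200e-9), 2))  # 29.98
```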

Image - Text Version

Shown is a colour infographic of a car with different coloured shapes around it, labelled with information. The car is shown from above, near the centre of the image. It is labelled “Autonomous Car Sensing Systems.” Starting at the front of the car, a long, pink wedge shape extends from the middle of the bumper. This is labelled “Adaptive Cruise Control and Long Range Radar.” A shorter, wider orange wedge in the same position is labelled “Emergency Brake Assist, Pedestrian Detection, Collision Avoidance System, and LIDAR.” An even shorter, wider yellow wedge here fans out almost to the top and bottom of the page. This is labelled “Lane Departure Warning, Traffic Sign Recognition, and Video Camera.” Two blue ovals extend around the headlights of the car. These are labelled “Cross Traffic Alert and Mid-Range Radar.” A long green capsule shape surrounds the edge of the car. This is labelled “Intelligent Parking Assist System, and Ultrasonic Radar.” Outside this, a large yellow circle stretches the height of the image. This is labelled “360 Degree Surround View Camera and Video Camera.” Near the back of the car, two large blue ovals extend around the side doors. These are labelled “Blind Spot Monitor and Mid Range Radar.” Two smaller blue ovals extend around the tail lights of the car. These are labelled “Rear Collision Warning, Cross Traffic Alert, and Mid Range Radar.” Two wedge shapes extend from the middle of the back bumper. The largest one is green and labelled “Intelligent Parking System and Ultrasonic Radar.” The smaller yellow wedge is not labelled. Another label, unconnected to any part of the image, reads “Central Computer.”

The vehicle’s software also uses information from GPS. This includes where the car is, and information like speed limits. This is a lot of information, which is why an autonomous car needs a powerful computer. This computer must also process all this information very quickly. Delays in deciding how to move the car could be very dangerous!
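To see why speed matters, it helps to work out how far a car travels while its computer is still thinking. The short sketch below does that calculation. The function name and the example speed and delays are chosen only for illustration.

```python
def distance_during_delay_m(speed_kmh, delay_seconds):
    """Metres a car travels while waiting for a decision."""
    speed_m_per_s = speed_kmh / 3.6  # convert km/h to metres per second
    return speed_m_per_s * delay_seconds

# At highway speed, even a short delay covers a lot of road.
print(round(distance_during_delay_m(100, 0.1), 2))  # 2.78
print(round(distance_during_delay_m(100, 0.5), 2))  # 13.89
```

At 100 km/h, a delay of half a second means the car moves almost 14 metres before it starts to react.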

Programming Driverless Vehicles

We once thought that by now, everyone would be using driverless cars. So, why is this not happening? It is pretty simple. Creating machines that can make decisions for themselves in a world of humans is tricky.

Sometimes when driving, a driver finds themselves in a difficult situation. For example, a driver suddenly sees a coyote standing in the middle of the road.

Image - Text Version

Shown is a colour photograph of an animal standing on concrete with yellow lines in the background. The animal is looking intently down the road. It looks a bit like a dog, but larger. It has narrow eyes, pointed ears, and thick fur. Its paws are on cracked grey concrete. Two yellow lines are painted across this, in the top right corner of the image.

On the side of the road is a deep ditch. The driver hopes the animal will run off, but it is not moving. The driver will not be able to stop in time. Should the driver swerve to avoid hitting the coyote? If they do, they might end up harming themselves and their car by going into the ditch. Or should they hit the coyote? If they do, the coyote may die, but the person and their car would be okay. What would you do?

If you think that making a decision like that is hard, imagine trying to create a computer program to do it! This is exactly what AI engineers are working on. Going back to the example of the coyote, do you think that everyone made the same decision as you? How you made your decision depends on your values. In other words, what you think is important.

Not everyone thinks the same things are important. A study of data gathered through MIT’s Moral Machine showed this.

The Moral Machine is a set of car accident scenarios in which people decide what they would do, given a choice. You can try it yourself online. The study found that people around the world made broadly similar decisions. People preferred to save people rather than animals. They preferred to save more lives over fewer lives. And they preferred to save children instead of adults. The researchers also noticed some differences between countries. These likely have to do with what people in a certain country value. For example, some countries value their elders more than others.

A related issue to this is bias in the training data used to develop ML models. ML models are only as good as the data that goes into them. One example of this is the data used to train the systems self-driving cars use to detect and avoid pedestrians. The datasets used to train these systems need to be extremely large and diverse to cover every possible size, shape, and skin tone of human beings. If they aren't diverse enough, we could end up with systems that are better at identifying pedestrians with some skin tones than others. This could mean certain groups of people are in danger, based on their skin tone or other factors.
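One simple way to look for this kind of bias is to test a system on labelled examples and compare how often it detects pedestrians in each group. The sketch below shows the idea. The group names and test results are invented for illustration, and they do not come from any real system or study.

```python
from collections import defaultdict

def detection_rate_by_group(results):
    """Return the fraction of pedestrians detected, for each group.

    `results` is a list of (group, detected) pairs, where `detected` is True or False.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, detected in results:
        totals[group] += 1
        if detected:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}

# Made-up test results for two hypothetical groups of pedestrians.
results = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
print(detection_rate_by_group(results))  # {'group_a': 0.75, 'group_b': 0.25}
```

A large gap between groups, like the one in this made-up example, would be a sign that the training data needs to be more diverse.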

In summary

The role of AI in personal vehicles is increasing. Someday these vehicles will probably be the norm. The next generation of people may not even learn how to drive!

Let’s Talk Science appreciates the contributions of Melissa Valdez, Technology Consultant from AI & Quantum, for revisions to this backgrounder.

Learn More

How does GPS work? (2014)

This video (2:43 min.), narrated by a child, explains how GPS works.

How Self-Driving Cars Work? (2019)

In this video (7:07 min.), learn more about how self-driving cars work and how they’re going to change the future.

Autonomous car goes for speed record (2019)

In this video (2:58 min.), see Robocar, the fastest autonomous car, in action.

The ethical dilemma of self-driving cars (2015)

This video (4:15 min.) by TED-Ed presents the ethical dilemmas of self-driving cars by using concrete examples.

References

Awad, E., Dsouza, S., Kim, R., et al. (2018). The Moral Machine experiment. Nature, 563, 59–64. https://doi.org/10.1038/s41586-018-0637-6

Hendrickson, J. (2020, January 3). What Are the Different Self-Driving Car “Levels” of Autonomy?. How-To Geek.

IEEE Spectrum. (n.d.). Accelerating Autonomous Vehicle Technology.

Jensen, C. & Jensen, C. (2017, February 9). States Must Prepare For Human Drivers Mixing It Up With Autonomous Vehicles. Forbes.

Rivelli, E. (2020, June 30). How Do Self-Driving Cars Work and What Problems Remain?. The Simple Dollar.

Schroer, A. (2019, December 19). Artificial Intelligence in Cars Powers an AI Revolution in the Auto Industry. Built In.

TED-Ed. [TED-Ed]. (2019, May 13). How do self-driving cars “see”? - Sajan Saini. [Video]. YouTube.